Warning to readers: this article is long and rambling, like most articles by the same author.

Once upon a time, I wrote a very simple command-line load testing tool in C. I called it "myload", partly because it was written by, well...myself, and partly as an allusion to MySQL (this was back in the days when MySQL ruled and I had yet to start using PostgreSQL).

As far as load testing tools go, myload wasn't fantastic. It performed "ok", being written in C and all, and it actually had quite good support for all parts of HTTP, thanks to the awesome libcurl library. But it had no advanced user simulation functionality - it could only read a list of static URLs and then request them, one by one, until it was out of URLs.
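To make that limitation concrete, here is a minimal Python sketch of the kind of sequential URL replay described above. It is not myload's code, which was C on top of libcurl. The standard library's `urllib.request` stands in for libcurl here, and the function names `fetch_url` and `replay_urls` are hypothetical.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, Tuple


def fetch_url(url: str) -> int:
    """Request one URL, read and discard the body, and return the HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            resp.read()
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; record the status instead of aborting the run.
        return err.code


def replay_urls(
    urls: Iterable[str], fetch: Callable[[str], int] = fetch_url
) -> List[Tuple[str, int, float]]:
    """Request each URL in order, one at a time, until the list is exhausted.

    Returns one (url, status, elapsed_seconds) tuple per request. There is no
    user logic, no think time and no concurrency: just a static list, replayed.
    """
    results = []
    for url in urls:
        start = time.perf_counter()
        status = fetch(url)
        results.append((url, status, time.perf_counter() - start))
    return results
```

Calling `replay_urls(["http://localhost:8080/", "http://localhost:8080/about"])` (hypothetical URLs) would request each page once, in order. Everything a real user does between requests is simply absent.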

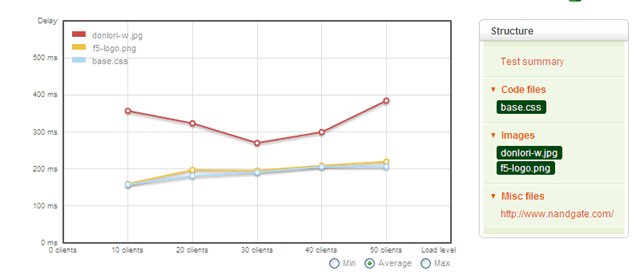

It also had no support for ramping up or down load, in terms of concurrent virtual, simulated users (VUs). Load Impact's online service nevertheless supported ramp-up tests using myload as load generator, and it did so by simply starting myload several times in succession, with different VU levels each time. In effect, performing multiple, short-duration load tests. Here is what a response time chart looked like back then:

(thanks to F5 and Lori MacVittie from whose blog article I stole this screenshot)

Those were the days! Only five distinct VU levels in the chart, with max, min and average response times reported on each level. I won't get into all the things that are less than fantastic with this approach. Let's just say there was room for improvement.
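The stepped approach can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Load Impact's actual orchestration code: `step_levels` and `stepped_ramp` are hypothetical names, and `run_fixed_load` is a hypothetical callback standing in for one short myload run at a constant VU level.

```python
from statistics import mean
from typing import Callable, Dict, List


def step_levels(max_vus: int, steps: int) -> List[int]:
    """Evenly spaced VU levels ending at max_vus, e.g. (100, 5) -> 20, 40, 60, 80, 100."""
    return [max_vus * i // steps for i in range(1, steps + 1)]


def stepped_ramp(
    max_vus: int, steps: int, run_fixed_load: Callable[[int], List[float]]
) -> Dict[int, Dict[str, float]]:
    """Emulate a ramp-up as several short, fixed-VU tests run back to back.

    run_fixed_load(vus) is a hypothetical callback that runs one short test at a
    constant VU level and returns the response times (ms) it observed. Only
    min/max/average survive per level, so the "ramp" is really a few data points.
    """
    summary: Dict[int, Dict[str, float]] = {}
    for vus in step_levels(max_vus, steps):
        times = run_fixed_load(vus)
        summary[vus] = {"min": min(times), "max": max(times), "avg": mean(times)}
    return summary
```

With `steps=5` this produces exactly five summarized VU levels, much like the chart described above; nothing between the steps is measured.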

Missing functionality?

An interesting question, though, is why I chose to write my own tool in the first place. Why not use Jmeter or the Grinder, which were available back then and had had lots of development put into them? Many would probably assume NIH (Not Invented Here) syndrome, but I actually had a couple of real reasons. Firstly, I'm not a Java person, and both Jmeter and the Grinder are Java applications. I didn't like having to install a JRE just to get something done. My mind conveniently tends to suppress boring memories, so I don't remember what it was like to run Java 1.x applications, but I'm sure it was nowhere near as fun as running a Java app today, and that's really not much fun either!

A bigger reason, however, was that at the time, the existing tools lacked functionality I thought was important for load testing. Specifically, both Jmeter and the Grinder equated one VU with one TCP connection, i.e. one VU only had access to one TCP connection over which to issue requests to the target system. Given that most people at the time wanted to simulate humans using web browsers to access a site, this was not very realistic. It meant that people would configure their load tests to stress a site with, say, 100 VUs and then think: "That went well. So my site can handle 100 simultaneous users, great!" In reality, though, the site might have been able to handle only a fraction of that number of real, human users, because browsers used (and still use) multiple concurrent TCP connections to download several things from the server in parallel, putting a lot more stress on the system than 1 VU in Jmeter would.
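The difference between the two models fits in a few lines. Below is a minimal Python sketch, not code from myload or any other tool: one simulated user fetches a page's resources through a thread pool, where each worker thread stands in for one browser connection. `run_vu_page_load` and `InFlightCounter` are hypothetical names, and `fetch` is whatever function performs a single request.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


class InFlightCounter:
    """Wraps a fetch function and records the peak number of requests in flight."""

    def __init__(self, fetch: Callable[[str], object]) -> None:
        self._fetch = fetch
        self._lock = threading.Lock()
        self._current = 0
        self.peak = 0

    def __call__(self, url: str) -> object:
        with self._lock:
            self._current += 1
            self.peak = max(self.peak, self._current)
        try:
            return self._fetch(url)
        finally:
            with self._lock:
                self._current -= 1


def run_vu_page_load(
    resources: List[str], fetch: Callable[[str], object], connections: int
) -> List[object]:
    """One simulated user loads a page's resources using `connections` parallel workers.

    Each worker thread stands in for one browser connection; connections=1 is the
    one-VU-one-connection model. Results come back in the order of `resources`.
    """
    with ThreadPoolExecutor(max_workers=connections) as pool:
        return list(pool.map(fetch, resources))
```

With `connections=1`, the counter's `peak` never exceeds 1, which is the Jmeter/Grinder model of the time. With a higher value, the same single VU keeps several requests open at once, as a browser does. Real browsers also reuse connections via keep-alive and cap connections per host, which this sketch ignores.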

And yeah, I could have dug in and contributed a patch to Jmeter or the Grinder to make their VUs use multiple connections, but given that I don't like Java much at all, and am not very proficient in it, it would have been both time-consuming and extremely painful for me to do so. Hacking together a simple tool in C based on libcurl was a much faster way to bring the functionality I wanted to market, as well as much more fun. So we created myload.

Reinventing the wheel gets better the second time you do it

After using myload for a while, however, we got tired of its limitations, and our CTO, Robin Gustafsson, wrote a next-generation tool that was compatible with myload but also introduced a real scripting language (Lua). This tool was given the internal name "rload", where the "r" could mean "our", instead of the previous "my": less possessive, more socialist. Of course, "r" could also stand for "Robin".

We wrote rload ourselves for two reasons:

- Jmeter, the Grinder and maybe one or two other tools that existed back then still had not implemented the multiple-connections-per-VU functionality we liked to brag about in our online service.

- We wanted it to be backwards compatible with myload, to make the transition easier for us.

So, yet another tool was born.

rload was pretty good, though. It was also written in C and used libcurl, but it had real scripting support using Lua and many features not found in any other tool at the time. Load Impact has been using rload ever since, updating it and adding new features regularly. Today, it is a competent tool, but its development has been driven entirely by the needs of the loadimpact.com online service. That means it has lots of features no one else would need, its command-line UX is inconsistent, and onboarding would be painful for new users if we released it publicly rather than just using it behind the scenes at loadimpact.com.

So we have never released either myload (scary thought) or rload (slightly less scary) to the general public; we have only used them in the loadimpact.com online service. But when we sat down to discuss what should come after rload, we decided it was time for a different approach.

We realized that ever since we started dabbling in load testing as a service, almost 10 years ago now, the publicly available load testing tools have been more or less... bad. Let's take a brief look at load testing history.

The dark ages

True load testing veterans in the 90s and 00s used commercial tools like HP (formerly Mercury) Loadrunner, Borland Silkperformer and a few lesser-known ones. Few real free/open source contenders existed back in the 90s, but in the 00s we had some, like OpenSTA and Jmeter. And yes, there were a couple of very simple tools, like Apachebench or HTTPerf, but I'll exclude them here because it's really not fair to compare them directly to advanced tools like Loadrunner.

At the time, though, the commercial tools were a lot better than the FOSS ones. I tried using OpenSTA and Jmeter in the early-to-mid 00s, and while my memory of it is kind of foggy, it was definitely painful.

But things changed. Jmeter got better. The Grinder showed up as a promising tool. Tsung came a bit later, I think. There were also several decent commercial tools, like WebLOAD, Neoload, the load testing in Microsoft's Visual Studio/VSTS, Rational Performance Tester, etc.

Page loads everywhere

Still, all tools were basically the same, catering to the same use case: performance test experts who wanted to design very complex test scenarios that mimicked real traffic to a web site as accurately as possible. API testing was rare; load testing was more about simulating a browser that fetched HTML, CSS, Javascript and a ton of images in order to display a web UI to a user: the archetypal "page load".

Load tests would be complex sequences of page loads. With dynamic data, for sure, but the traffic pattern as a whole simulated browsers rendering pages, and nothing else. User scenario recording features were built around this use case, as were any scripting APIs. Results output was organized to display "page load times" and their various components.

So all "serious" load testing tools were geared towards this use case. They were also geared towards performance test experts who didn't mind running bloated software, often Windows-based, with complex point-and-click GUIs. The more complex the tool, the better, actually, as complexity provided a knowledge moat that meant job security and better pay for the expert.

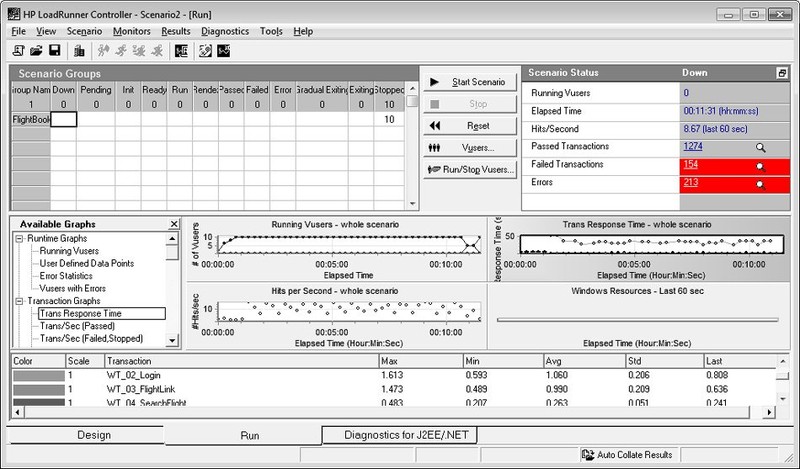

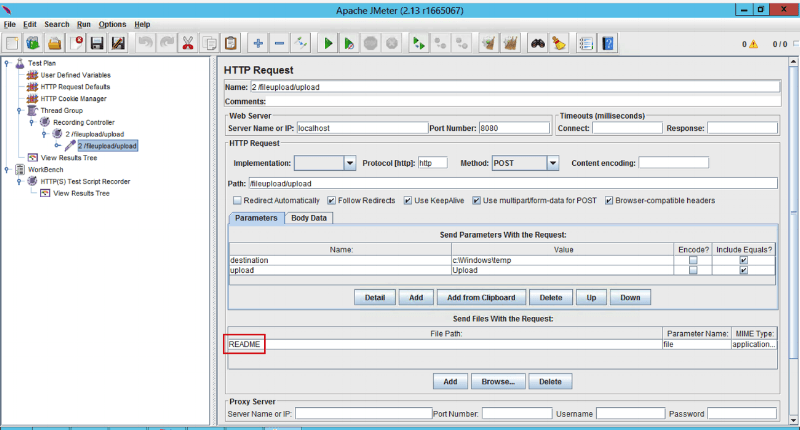

To illustrate what I mean, here are a couple of screenshots from Loadrunner and Jmeter - both complex, GUI-based tools aimed at performance test experts who want to simulate page loads:

Loadrunner

Jmeter

AJAX, APIs, Mobile

Then the world started changing. Page loads were no longer the only possible traffic scenario. AJAX began the change, with client-side Javascript suddenly loading things "whenever", which made life much more complicated for any tool that tried to look at traffic and figure out when a "page" had "loaded". REST APIs became very popular as a way for Javascript code to fetch the data it needed. Mobile happened, and single-page apps became a thing. More and more traffic became API-driven and asynchronous.

The load testing tools were slow to adapt to this new world, however. I would argue that the commercial tools did not change much at all. They continued catering to the old guard: the performance test experts working on complex "web site" load tests that simulated a bunch of page loads. Performing a load test was still a difficult, time-consuming and very manual effort that often required specialists and significant resources.

At the same time, more and more developers were writing and automating test cases. The DevOps trend had started, and most load testing tools started to feel a bit ancient.

In my mind, this is where a gap began to appear between market needs and the available options.

The renaissance

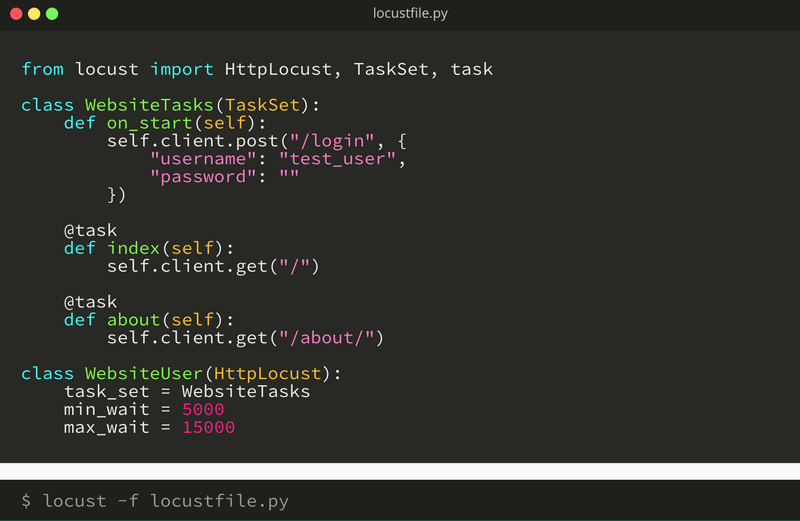

Then around 2010 I think, another breed of tools started to show up, that seemed to respond to the needs of the growing developer load testing market. Locust was maybe the first, but then came Artillery and Vegeta. There are probably others I have missed. These tools - especially Artillery and Vegeta - were much more developer-focused. They offered good and modern command-line UIs, like many developer tools today do, would output results in formats suitable for parsing by software, not humans, and they worked well in an automated test setting (especially Artillery). They are all free, open source software that developers tend to prefer. Here is a screenshot of how to use Locust:

Locust UX

This UX is clearly more developer-oriented and modern. No bloated GUI application to install. In the case of Locust there is a simple, built-in web UI, but later tools like Artillery have done away with GUIs completely and are more cleanly focused on automated testing.

These tools were a big step forward, and perhaps a tool's age determines how good its product-market fit is for the DevOps/automated testing use case: the younger the tool, the better the fit. Locust is the oldest, and while it offers real scripting in Python, which we think developers really like, its command-line UX, results output options and performance still need improvement. Vegeta has better, but not great, command-line UX and lacks scripting. Artillery has the best command-line UX and, in general, the best automation support, but suffers from a lack of scripting ability and low performance. If you're interested in the relative merits of various tools, I wrote a review of open source load testing tools earlier.

Reinventing the wheel gets better every time you do it

So, at Load Impact we had long been discussing what should come next once we retired rload. Should we go for one of the available tools out there? Earlier, we had contemplated using Jmeter, but it always felt like it would be a step backward. Most tools were too simple and lacked any way to run logic in a test scenario, and when it came to executing actual script code, only Locust and the Grinder could do that (well, and Wrk, but with a not very user-friendly API).

We also wanted to support a scripting language that was more common than the one we currently offered in our online service: Lua. To be honest, Lua has gained traction over the last couple of years and may not be a bad choice at all for scripting load test scenarios in the future. It has most of the features you need, it is small and fast, and its VM uses little memory. But compared to, e.g., Javascript, it is still a tiny language in terms of adoption. Many more people are familiar with Javascript, and there are tons more JS libraries out there that can be used in a tool that supports JS-based scripting.

So we invented the wheel again. Or rather, we reinvented the Locust/Artillery/Vegeta wheel this time. (Note that I am not including Gatling in this "new breed" of tools, because I think Gatling is a new and better Jmeter, but just like Jmeter, it is not very good at catering to developers who want to code their tests and then automate them.)

Enter k6

We started work on our new tool k6 in 2016 and released it publicly in 2017. The aim is to provide a high-quality load testing tool for developers and automated load testing. The selling points are:

- Scenario scripting in a real (and ubiquitous) language - Javascript - so that developers can write their test cases in code

- Simple, intuitive and consistent command line UX

- Output options useful for test automation (plaintext, JSON, InfluxDB, pass/fail results to CI system via exit codes)

- Good performance and measurement accuracy

- Purpose-built scripting API that is intuitive and works for both functional and performance tests
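The "pass/fail results via exit codes" point is what lets a CI server gate a build on a load test: the job fails whenever the tool's process exits with a non-zero code. Here is a minimal Python sketch of that idea, assuming a hypothetical pass criterion of a 95th-percentile response time limit. It is not k6's implementation; the function names and the choice of exit code 1 are illustrative only.

```python
import sys
from typing import Sequence


def p95_nearest_rank(values: Sequence[float]) -> float:
    """95th percentile by the nearest-rank method: the ceil(0.95 * n)-th smallest value."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), no float rounding
    return ordered[rank - 1]


def ci_exit_code(durations_ms: Sequence[float], p95_limit_ms: float) -> int:
    """0 (pass) if the p95 response time is within the limit, otherwise 1 (fail)."""
    return 0 if p95_nearest_rank(durations_ms) <= p95_limit_ms else 1


def report_to_ci(durations_ms: Sequence[float], p95_limit_ms: float) -> None:
    """End the process with the pass/fail code; a CI job fails on any non-zero exit."""
    sys.exit(ci_exit_code(durations_ms, p95_limit_ms))
```

Calling `report_to_ci(durations, 500)` as the last step of a test script would make the CI job go red whenever the 95th-percentile response time exceeds 500 ms, with no human needed to read the results.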

k6 is free and open source software, available on Github. It is written in Go, which provides speed and good concurrency support on multiple platforms. It integrates the Goja Javascript engine and supports ES6 through BabelJS.

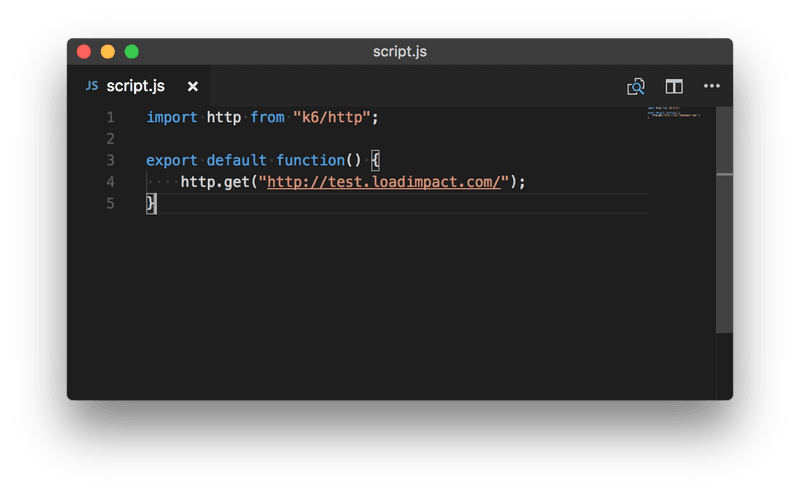

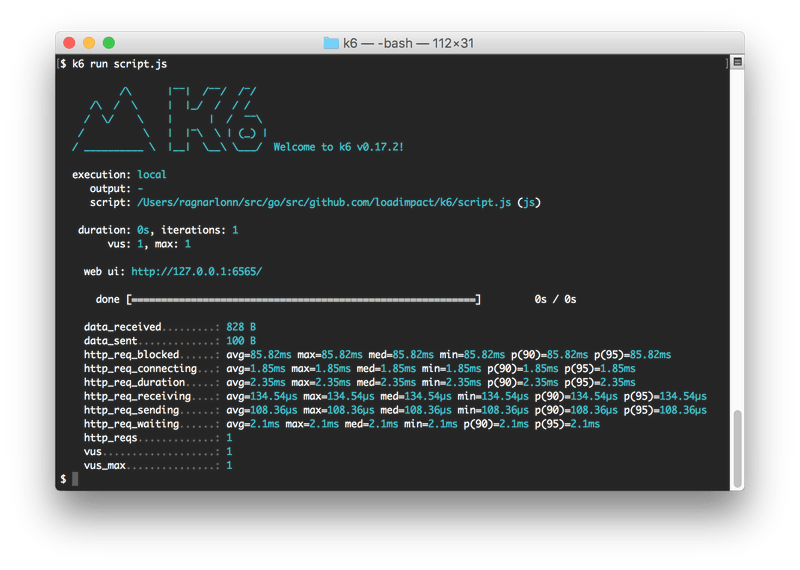

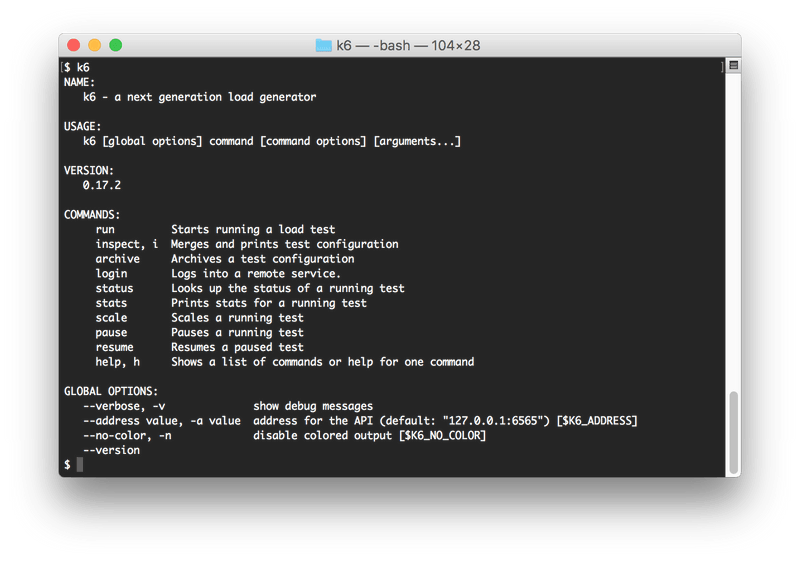

k6 still has some way to go to become the perfect tool for developers doing automated load testing, but we believe it is much better than anything else currently available, both free and commercial options included. Here are a couple of k6 screenshots:

a simple k6 script

executing the script

running without params shows the built-in help

Our hope is that k6 will be the start of the next breed of developer-centric load testing tools. We think there is an emerging market that is completely under-served by decent tools today, and that while this market may not be huge yet, it is growing fast. We want to be a part of that trend. Or maybe it's just an excuse for reinventing wheels - time will tell!